Kafka is an open-source publish/subscribe platform. It can act as a message broker and as a fault-tolerant storage system. Its speed and reliability make it a natural choice for managing communications between enterprise applications, so being able to monitor your Kafka systems is critical.

In this tutorial, we’ll see how easy it is to connect your Kafka logs to Papertrail™ so you can take advantage of its ability to store, index, and instrument your messaging logs.

Prerequisites

If you want to follow this tutorial, you’ll need a Kafka message broker and a Papertrail account.

Kafka

If you don’t already have Kafka up and running, you can follow the Kafka quickstart and install it on a local system. We’ll only send a few test messages to verify that logging works, so a working server is all you need. Make sure it has Internet connectivity so it can reach the Papertrail servers.

Papertrail Account

If you don’t have a Papertrail login yet, you can create a free account. The free 30-day trial offers 16 GB for the first month and 50 MB per month after that, which is more than enough for this tutorial.

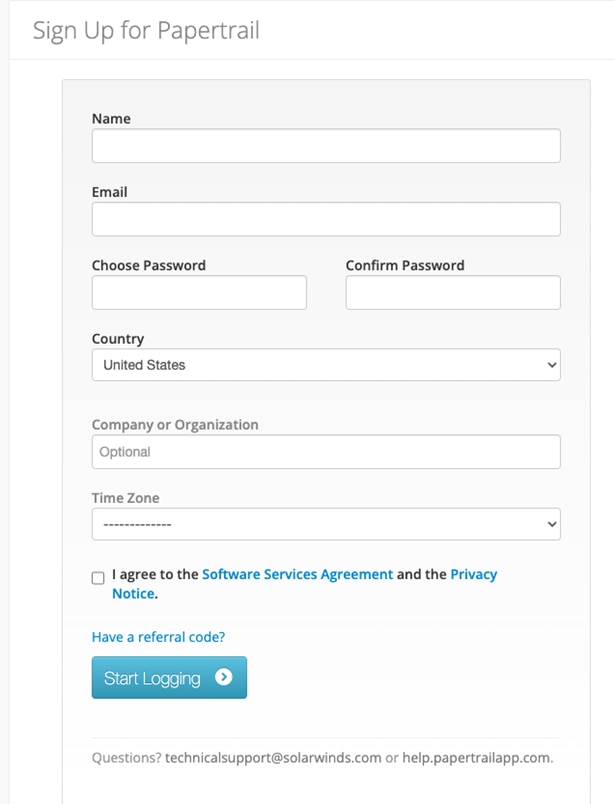

Sign up for an account here.

Then, fill out the new account form and click Start Logging.

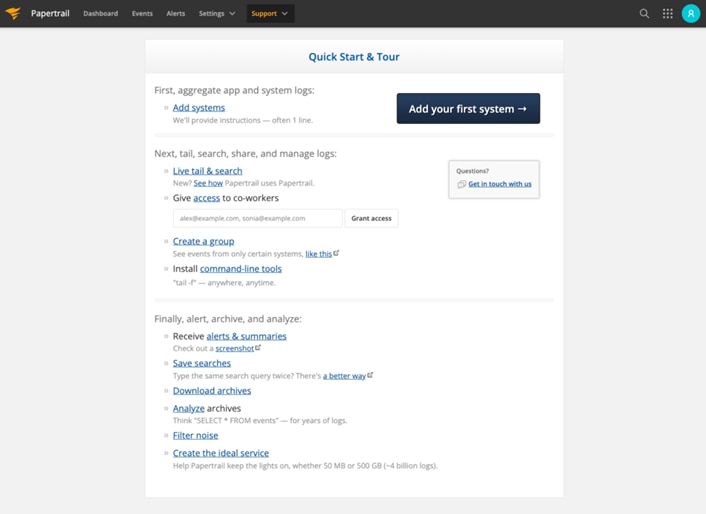

This will take you to your Papertrail dashboard.

The Quick Start and Tour page in Papertrail will guide you through adding a log source, searching logs, and setting up alerts. For this walkthrough, we’ll skip it and configure a destination for the Kafka logs.

Exporting Kafka Logs to Papertrail

Kafka is a Java application and uses Apache’s Log4j 1.2.x for server logging. Apache deprecated this version of log4j in 2015, and its most significant limitation for our purposes is that it doesn’t support secure sockets. So logs forwarded directly from Kafka to Papertrail aren’t encrypted. We’ll discuss this further below.

Despite this limitation, log4j makes exporting logs to Papertrail a straightforward process because it’s a sophisticated logging framework with a flexible architecture.

Application developers use a simple interface to create a logging object and use it to publish messages. The framework manages the messages based on configuration, not application code. So you can decide how to handle messages at runtime. The framework gives you complete control over which messages it logs and where it will log them. You can even send messages to more than one destination.

Log4j calls its output destinations Appenders. The framework comes with appenders for the console, files, remote sockets, remote syslog daemons, and various other destinations. Kafka even has an appender for sending logs as pub/sub messages.

Papertrail can act like a remote syslog daemon, so we don’t need a custom appender to export logs to it. We’ll use the remote syslog appender and tell it to route messages to Papertrail for indexing.

Let’s get started!

Create a Papertrail Destination

First, we need to create a Papertrail destination for our Kafka logs.

In Papertrail, a log destination is a network endpoint that accepts messages via HTTPS or TCP/UDP. The TCP/UDP option is traditional UNIX syslog messaging.

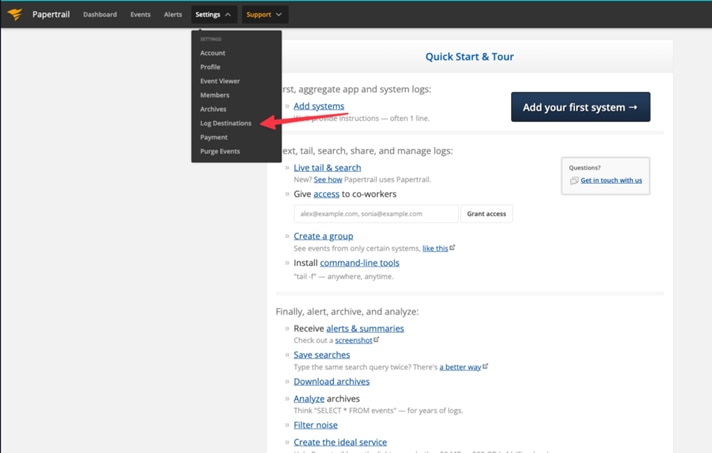

Click on the Settings menu in your Papertrail dashboard.

Select the Log Destinations option. This will take you to the new destination page.

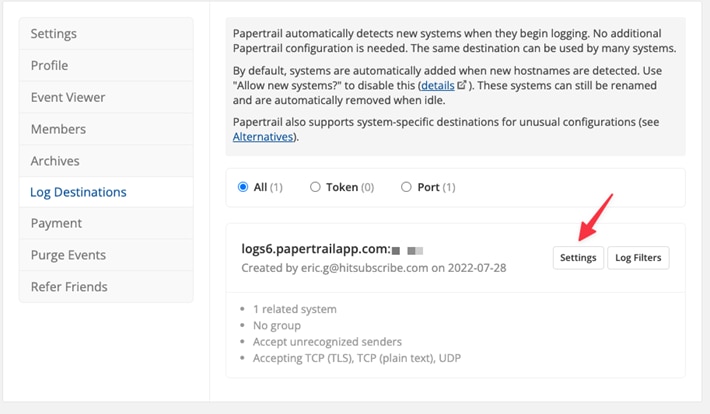

Your new destination has a hostname and a port. (I greyed out the port in this image.)

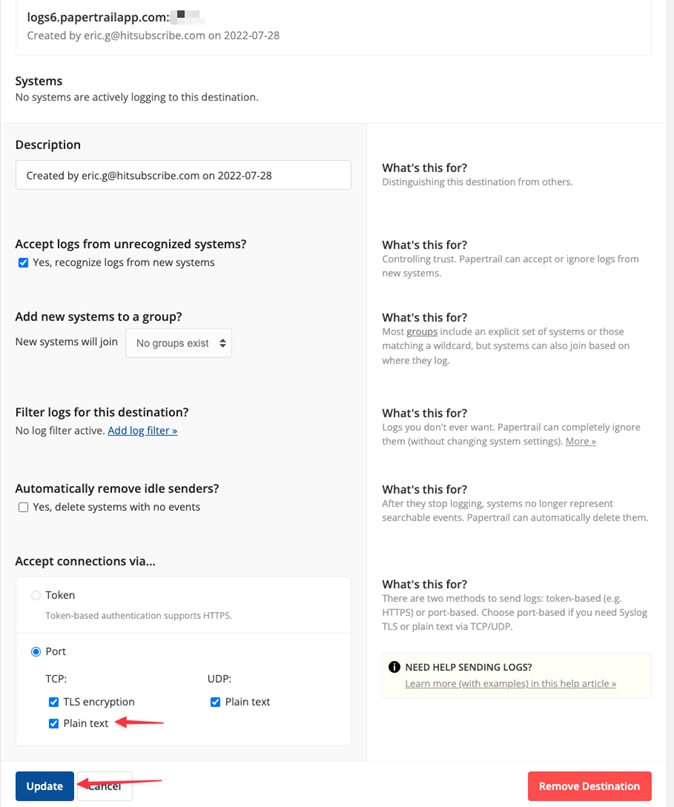

Before we can send plain text traffic to it, we need to make one more change. Click the settings button.

Click the Plain text checkbox near the bottom of the page, then click Update to save your change.

Configure Log4J

Now that we have a destination for the logs, we can configure log4j with a syslog appender.

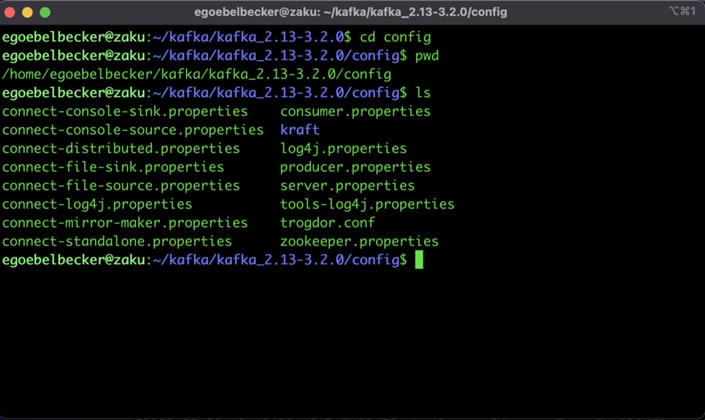

Open a shell and move to the config directory in your Kafka installation directory.

You’ll find several properties files in this directory. Kafka uses log4j.properties for logging, so we’ll change that file.

This excerpt from near the top contains the parts we’re interested in:

# Unspecified loggers and loggers with additivity=true output to server.log and stdout

# Note that INFO only applies to unspecified loggers, the log level of the child logger is used otherwise

log4j.rootLogger=INFO, stdout, kafkaAppender

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=[%d] %p %m (%c)%n

log4j.appender.kafkaAppender=org.apache.log4j.DailyRollingFileAppender

log4j.appender.kafkaAppender.DatePattern='.'yyyy-MM-dd-HH

log4j.appender.kafkaAppender.File=${kafka.logs.dir}/server.log

log4j.appender.kafkaAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.kafkaAppender.layout.ConversionPattern=[%d] %p %m (%c)%n

As the comment says, line #3 in the snippet (around line #18 in the actual file) sets the default logging behavior: messages with a priority of INFO or higher go to two appenders, stdout and kafkaAppender.

The next two stanzas define the two appenders. One goes to the console and the other to a file named server.log in the logs directory.

We want to add a new appender that will send messages to Papertrail.

So, add this to the file immediately under line #3:
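A sketch of that stanza, assuming the standard log4j 1.2 SyslogAppender properties. The host and port (logsN.papertrailapp.com:XXXXX) are placeholders for the values shown on your own destination, and the LOCAL0 facility is an assumed choice, not a required one:

```properties
# Name the appender and bind it to log4j's syslog appender class
log4j.appender.papertrailAppender=org.apache.log4j.net.SyslogAppender
# Forward only messages at INFO or higher
log4j.appender.papertrailAppender.Threshold=INFO
# Placeholder: replace with the host:port of your Papertrail destination
log4j.appender.papertrailAppender.SyslogHost=logsN.papertrailapp.com:XXXXX
# Assumed facility; any valid syslog facility name works here
log4j.appender.papertrailAppender.Facility=LOCAL0
# Do not prepend log4j's own timestamp/hostname header
log4j.appender.papertrailAppender.Header=false
# Format the message body with a conversion pattern
log4j.appender.papertrailAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.papertrailAppender.layout.conversionPattern=%p: (%F:%L) %x %m %n
```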

This defines an appender named papertrailAppender.

Let’s go over the configuration:

- First, we define the papertrailAppender name and associate it with the SyslogAppender class.

- Then we set the log priority to INFO for the appender.

- Next, we specify the Papertrail host information.

- The next few lines specify the logging facility (which classifies messages), disable the default message header, and specify the log formatting.

Before delving into the log formatting, let’s send some messages to Papertrail to ensure this works.

So, add this appender to the default logging configuration. Head back to line #3 and add the appender to the list:

# Unspecified loggers and loggers with additivity=true output to server.log and stdout

# Note that INFO only applies to unspecified loggers, the log level of the child logger is used otherwise

log4j.rootLogger=INFO, stdout, kafkaAppender, papertrailAppender

Now log messages will go to the console, server.log, and Papertrail. Depending on your needs, you can remove either of the former once Papertrail works.
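As an illustration of trimming the list later, the root logger line might look like this once Papertrail is confirmed working. This sketch assumes you want to keep the local server.log file and drop only the console output:

```properties
# Sketch: console appender removed; file and Papertrail appenders kept
log4j.rootLogger=INFO, kafkaAppender, papertrailAppender
```

The stdout appender definition can stay in the file. An appender that no logger references is simply unused.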

Testing Kafka Logs in Papertrail

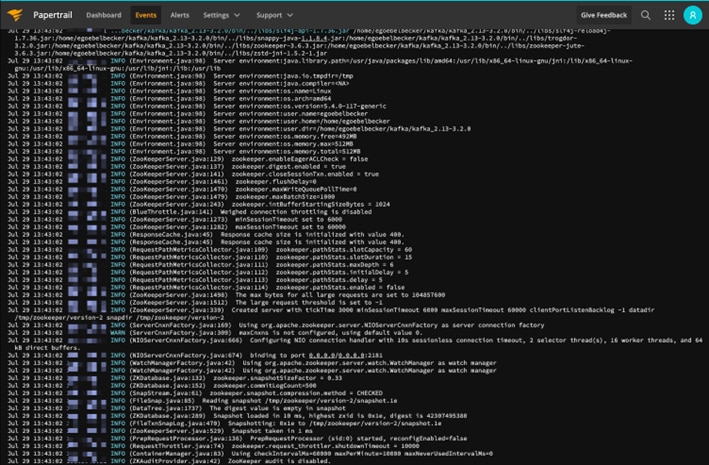

With this configuration, log4j should send ZooKeeper logs to Papertrail, because the ZooKeeper start script bundled with Kafka reads the same log4j.properties file. Let’s try.

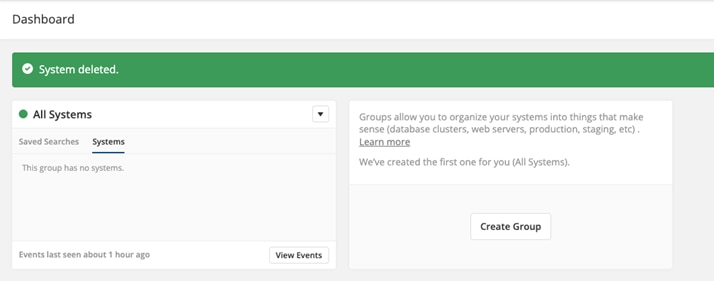

If you’re using a new account, your dashboard is empty:

If not, you’ll see one or more systems listed.

Let’s start ZooKeeper and see what happens:

$ bin/zookeeper-server-start.sh config/zookeeper.properties

[2022-07-29 13:43:02,037] INFO Reading configuration from: config/zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,039] WARN config/zookeeper.properties is relative. Prepend ./ to indicate that you're sure! (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,041] INFO clientPortAddress is 0.0.0.0:2181 (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,041] INFO secureClientPort is not set (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,041] INFO observerMasterPort is not set (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,042] INFO metricsProvider.className is org.apache.zookeeper.metrics.impl.DefaultMetricsProvider (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,043] INFO autopurge.snapRetainCount set to 3 (org.apache.zookeeper.server.DatadirCleanupManager)

[2022-07-29 13:43:02,043] INFO autopurge.purgeInterval set to 0 (org.apache.zookeeper.server.DatadirCleanupManager)

[2022-07-29 13:43:02,043] INFO Purge task is not scheduled. (org.apache.zookeeper.server.DatadirCleanupManager)

[2022-07-29 13:43:02,043] WARN Either no config or no quorum defined in config, running in standalone mode (org.apache.zookeeper.server.quorum.QuorumPeerMain)

[2022-07-29 13:43:02,044] INFO Log4j 1.2 jmx support not found; jmx disabled. (org.apache.zookeeper.jmx.ManagedUtil)

[2022-07-29 13:43:02,044] INFO Reading configuration from: config/zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,044] WARN config/zookeeper.properties is relative. Prepend ./ to indicate that you're sure! (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,044] INFO clientPortAddress is 0.0.0.0:2181 (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,045] INFO secureClientPort is not set (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2022-07-29 13:43:02,045] INFO observerMasterPort is not set (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

(more logs)

With the console appender still configured, we see a few screens full of messages. We should see the same messages on Papertrail.

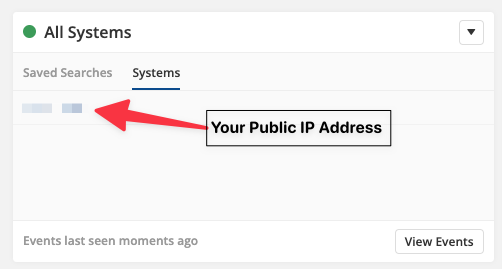

Check your dashboard, and you should see a new system:

Click on the IP address, and Papertrail will take you to your logs:

And there are the logs. You’ll see the same message set as you saw on the console.

Let’s wrap up by tweaking the message formatting.

Adjust Log Formatting

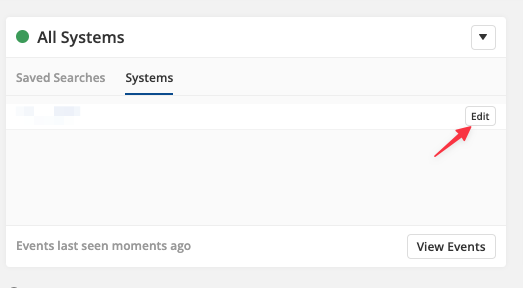

Before we adjust the log messages, let’s look at the Papertrail configuration.

Our system is showing up as an IP address, which isn’t helpful. Let’s give it a name.

Go back to your dashboard and mouse over the system name:

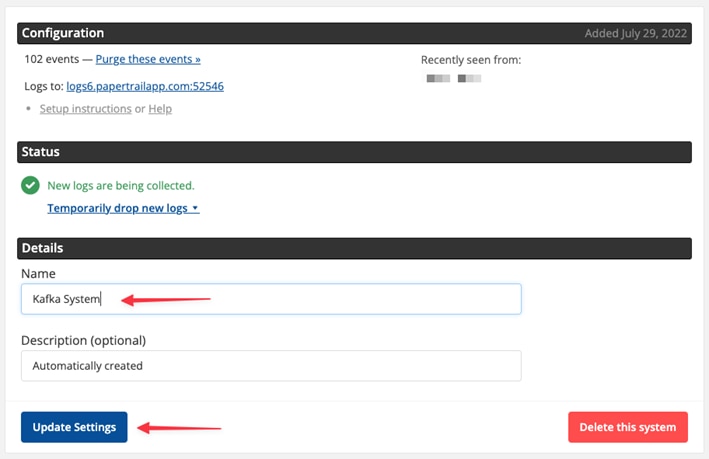

This brings up the edit button. Click it to view your system’s properties:

Enter a new name and click Update Settings.

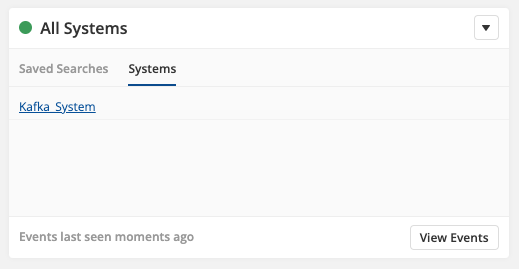

Much better!

Next, let’s look at the message contents.

Here’s one of the messages:

Jul 29 15:21:30 XXX.XX.X.XX INFO (Environment.java:98) Server environment:java.compiler=<NA>

Syslog supplied the date and time; the rest came from this:

log4j.appender.papertrailAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.papertrailAppender.layout.conversionPattern=%p: (%F:%L) %x %m %n

PatternLayout uses a formatting string called a conversion pattern. You can read more about how conversion patterns work in the log4j PatternLayout documentation.

The string above specifies:

Priority: (Filename:Line Number) [thread context] [message] [line separator]

Let’s add the Java class and method names:

log4j.appender.papertrailAppender.layout=org.apache.log4j.PatternLayout

log4j.appender.papertrailAppender.layout.conversionPattern=%p: (%l) %x %m

This string is shorter than the previous one because %l gives us the class and method along with the filename and line number. We can also remove the line separator since Papertrail doesn’t need it.
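For reference, here is the same layout with the conversion characters from both patterns annotated as comments. The descriptions follow the log4j 1.2 PatternLayout documentation, and the fragment is a sketch that changes nothing about the configuration itself:

```properties
# %p  priority (level) of the event, such as INFO or WARN
# %F  source file name where the logging call was made
# %L  line number of the logging call
# %l  full caller location: class, method, file name, and line number
# %x  nested diagnostic context (NDC) of the logging thread
# %m  the application-supplied message
# %n  platform line separator
log4j.appender.papertrailAppender.layout=org.apache.log4j.PatternLayout
log4j.appender.papertrailAppender.layout.conversionPattern=%p: (%l) %x %m
```

The log4j documentation warns that generating caller location information (%F, %L, %l) is slow, so it is worth weighing the extra detail against logging overhead on a busy broker.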

Here’s what the messages look like:

Jul 29 15:41:36 Kafka_System INFO (org.apache.zookeeper.Environment.logEnv(Environment.java:98)) Server environment:java.compiler=<NA>

That’s a lot more information without altering the application code.

Kafka and Papertrail

In this post, we saw how Kafka’s use of log4j 1.2.x makes it easy to send its logs to Papertrail. With some simple configuration and a Papertrail destination, we added remote monitoring and observability to a Kafka server.

Get started with SolarWinds Papertrail centralized logging today!

This post was written by Eric Goebelbecker. Eric has worked in the financial markets in New York City for 25 years, developing infrastructure for market data and financial information exchange (FIX) protocol networks. He loves to talk about what makes teams effective (or not so effective!).
